- At WWDC on Monday, Apple subtly touted how much work it’s doing in cutting-edge artificial intelligence and machine learning.

- Unlike most tech companies that use artificial intelligence, Apple does sophisticated computing on its devices instead of relying on the cloud.

- Rather than talking about models and AI technology, Apple emphasizes products, so it usually just showcases new features that are quietly enabled by behind-the-scenes AI.

Apple Park is seen before the Worldwide Developers Conference (WWDC) in Cupertino, California on June 5, 2023.

Josh Edelson | AFP | Getty Images

On Monday, during Apple’s annual developer conference, WWDC, the company subtly touted how much work it’s doing in cutting-edge artificial intelligence and machine learning.

While Microsoft, Google, and startups like OpenAI embraced cutting-edge machine learning technologies such as chatbots and generative AI, Apple seemed to be sitting on the sidelines.

But on Monday, Apple announced several significant AI features, including an improved iPhone autocorrect based on a machine learning program that uses a transformer language model, the same technology behind ChatGPT. Apple said the feature will even improve over time by learning how users write and type.

“In those moments where you just want to type a ducking word, well, the keyboard will learn it, too,” said Craig Federighi, Apple’s head of software, joking about AutoCorrect’s tendency to use the nonsensical word “ducking” to replace a common expletive.

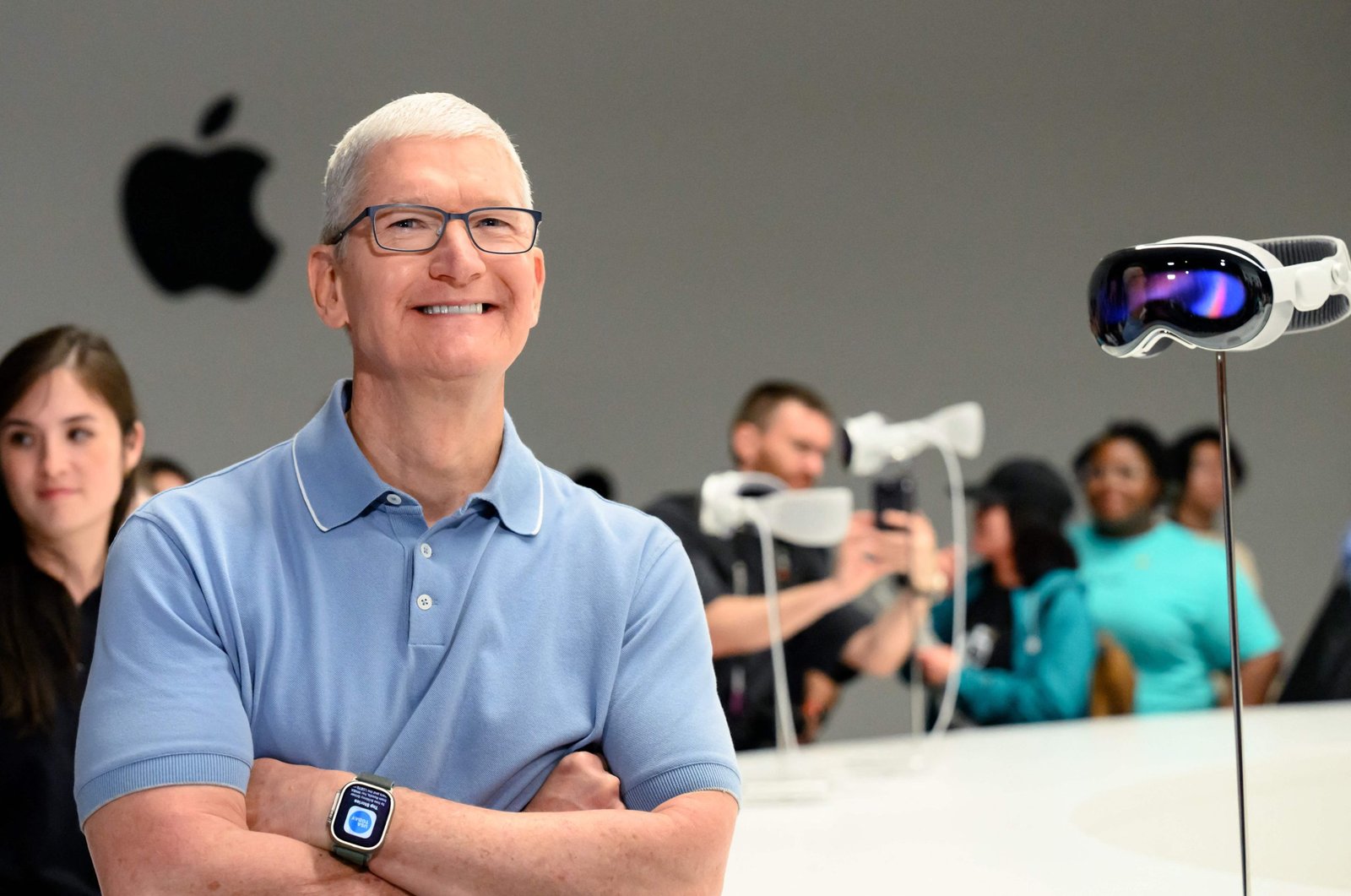

The biggest news on Monday was Apple’s new augmented reality headset, the Vision Pro, but the company still showed that it is working on, and paying attention to, developments in machine learning and cutting-edge artificial intelligence. OpenAI’s ChatGPT may have reached over 100 million users within two months of its launch last year, but now Apple is using the technology to enhance a feature that 1 billion iPhone owners use every day.

Unlike its rivals, who are building bigger models with server farms, supercomputers and terabytes of data, Apple wants AI models on its devices. The new AutoCorrect feature is especially impressive because it runs on the iPhone, while models like ChatGPT require hundreds of expensive GPUs working in tandem.

On-device AI bypasses many of the data privacy issues that cloud-based AI faces. When the model can be run on a phone, Apple has to collect less data to run it.

It’s also closely tied to Apple’s control over its hardware stack, right down to its silicon chips. Apple puts new AI and GPU circuits into its chips every year, and its control over the overall architecture allows it to adapt to changes and new techniques.

Apple doesn’t like to talk about “artificial intelligence.” It prefers the more academic phrase “machine learning,” or simply talks about the features the technology enables.

Some of the other major AI companies have leaders from academic backgrounds. This has led to an emphasis on showcasing their work, explaining how it could improve in the future, and documenting it so that other people can study and build on it.

Apple is a product company and has been intensely secretive for decades. Instead of talking about the specific AI model, training data, or how it might improve in the future, Apple simply mentions the feature and says there’s some cool technology at work behind the scenes.

An example of this on Monday was an improvement to AirPods Pro that automatically turns off noise cancellation when the user is in a conversation. Apple didn’t frame this as a machine learning feature, but it’s a tough problem to solve, and the solution relies on AI models.

In one of the bolder features announced on Monday, Apple’s new Digital Persona feature takes a 3D scan of a user’s face and body and can then virtually recreate their appearance during video calls with other people while the user wears the Vision Pro headset.

Apple also mentioned several other new features that draw on the company’s skill in neural networks, such as the ability to identify the fields to fill in on a PDF.

One of the biggest cheers of the afternoon in Cupertino was for a machine learning feature that allows the iPhone to distinguish the user’s own pet from other cats or dogs and gather all of that pet’s photos into one folder.
